HBA Queue Depth / Pending Exchanges

What is Queue Depth?

Storage Array Queue Depth is the maximum number of SCSI exchanges that can be open on a storage port at any one time. HBA Queue Depth is a setting on an individual Host Bus Adapter which specifies how many exchanges can be sent to a target or LUN at one time.

Why Incorrectly-Set HBA Queue Depths Are a Problem

Default HBA Queue Depth values can vary a great deal by manufacturer and version. They are often set higher than what is optimal for most environments. Setting Queue Depths too high can cause storage ports to be overrun or congested. This can lead to poor application performance and potentially to data corruption and loss of data. A common misunderstanding is that higher Queue Depth equates to higher performance, whereas in reality performance can decrease if Queue Depths are set too high.

If an HBA Queue Depth is set too low, it could impair the HBA’s performance and lead to underutilization of the storage port’s capacity. This occurs because the network will be underutilized and because the storage system will not be able to take advantage of its caching and serialization algorithms which can improve performance.

Queue Depth settings on HBAs can also be used to throttle servers so that the most critical servers are allowed greater access to the necessary storage and network bandwidth. If they are not set correctly, critical applications may have to wait for data while lower-priority tasks proceed instead. This can cause variable and erratic transaction latency.

A useful analogy for Queue Depth is a shared office printer. The size of an individual print job is analogous to the HBA queue. If one user is printing a 256-page job and another user tries to print an 8-page job, the second user has to wait for the 256-page job to complete before printing can start, which makes for a very long wait. If a rule is set that no print job can exceed 8 pages (a Queue Depth of 8), every user gets fairer access to the printer and the average time to complete a job is much lower.
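The printer analogy can be worked through numerically. The sketch below is only an illustration of the analogy, not a model of an HBA: it assumes both jobs are waiting at time zero, measures time in pages printed, and interprets the 8-page rule as "each user may print at most 8 pages per turn before rejoining the queue". The function name `completion_times` and the user labels are hypothetical.

```python
from collections import deque


def completion_times(jobs, max_chunk=None):
    """Return {user: finish time}, with time measured in pages printed.

    jobs: list of (user, pages) in arrival order, all waiting at time 0.
    max_chunk: most pages a user may print per turn; None means each
               job runs to completion before the next one starts.
    """
    queue = deque(jobs)
    clock = 0
    finished = {}
    while queue:
        user, remaining = queue.popleft()
        chunk = remaining if max_chunk is None else min(remaining, max_chunk)
        clock += chunk
        remaining -= chunk
        if remaining:
            # Unfinished job goes to the back of the line.
            queue.append((user, remaining))
        else:
            finished[user] = clock
    return finished


def average(times):
    return sum(times.values()) / len(times)


jobs = [("user_a", 256), ("user_b", 8)]
unlimited = completion_times(jobs)            # no page limit
capped = completion_times(jobs, max_chunk=8)  # "Queue Depth of 8"

print("unlimited:", unlimited, "average", average(unlimited))
print("capped:   ", capped, "average", average(capped))
```

Under these assumptions the 8-page user finishes after 16 pages instead of 264, while the 256-page user finishes only 8 pages later than before, so the average completion time drops from 260 to 140 pages.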

A properly-configured lower-tier SAN can often provide greater performance and reliability than a more expensive higher-tier SAN that is poorly configured. One of the biggest factors in ensuring availability is ensuring that redundant systems are actually properly configured and working. Managing Queue Depth is the best way to prevent or minimize the severity of heavy load times in a SAN.

Storage manufacturers will reveal the number of simultaneous exchanges their front-end ports can handle, but will rarely reveal at what point performance actually starts to degrade. While the queue on the storage port stays below roughly 50-60% saturation, the response time for each exchange remains fairly predictable and fast. Once the storage port queue goes beyond 60% utilization, the response time for each exchange rises exponentially (a 10 ms response time is normal at 60% saturation, 20 ms at 65%, and 200 ms at 80%). HBA Queue Depth settings should be tuned with this in mind: the ultimate goal is to keep storage port queue saturation at or below 50%.
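The saturation thresholds above can be expressed as a simple check. The following is a minimal sketch: the port queue limit of 1024 and the per-initiator pending exchange counts are hypothetical values, and the function names are illustrative rather than part of any product. Only the 50% target and the 60% knee come from the text.

```python
def port_saturation(pending_exchanges, port_queue_limit):
    """Fraction of the storage port queue currently in use.

    pending_exchanges: pending exchange counts, one per initiator
                       sharing the storage port.
    port_queue_limit:  maximum simultaneous exchanges the port accepts
                       (vendor-published figure; hypothetical here).
    """
    if port_queue_limit <= 0:
        raise ValueError("port_queue_limit must be positive")
    return sum(pending_exchanges) / port_queue_limit


def classify(saturation, target=0.50, knee=0.60):
    """Map a saturation fraction onto the ranges described in the text."""
    if saturation <= target:
        return "ok"        # at or below the 50% tuning goal
    if saturation <= knee:
        return "warning"   # still predictable, but above the goal
    return "degraded"      # past 60%, response times climb steeply


# Hypothetical snapshot: four initiators sharing one storage port.
pending = [32, 64, 256, 128]
saturation = port_saturation(pending, port_queue_limit=1024)
status = classify(saturation)
print(f"saturation {saturation:.1%} -> {status}")
```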

Required to identify: SAN Performance Probe hardware.

What are Common Causes of Incorrectly-Set HBA Queue Depths?

The most common cause of incorrectly-set HBA Queue Depths is utilizing the default settings provided by the HBA driver vendor. Industry defaults tend to be in the range of 32 to 256, significantly higher than optimal. Most settings have not taken into consideration the number of servers that are connecting to a storage port, the number of LUNs available on that port, or the Queue Depth limits for each storage array accessed by a particular HBA. Determining the optimal values requires the ability to measure aggregated Queue Depths in real time, regardless of the storage vendor or device. Another key factor is that the HBA Queue Depth setting is often outside of the control of the SAN and Storage Team, as it is implemented by the team managing the servers (who may not have the required knowledge to correctly set the parameter).
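A back-of-the-envelope calculation shows why defaults overshoot once fan-in is taken into account. This sketch assumes the HBA Queue Depth is applied per LUN, that every host could fill its queues at the same moment (a worst case), and that the goal is to stay at 50% of the storage port's queue limit. The port limit of 2048, the host count, and the LUN count are hypothetical, as are the function names.

```python
def worst_case_saturation(queue_depth, hosts, luns_per_host, port_queue_limit):
    """Port saturation if every host fills every per-LUN queue at once."""
    return (queue_depth * hosts * luns_per_host) / port_queue_limit


def max_queue_depth(port_queue_limit, hosts, luns_per_host,
                    target_saturation=0.5):
    """Largest per-LUN Queue Depth that keeps the worst case at or
    below the target saturation of the storage port queue."""
    if hosts <= 0 or luns_per_host <= 0:
        raise ValueError("hosts and luns_per_host must be positive")
    budget = int(port_queue_limit * target_saturation)
    return budget // (hosts * luns_per_host)


# Hypothetical shared port: 2048 exchanges, 32 hosts, 4 LUNs per host.
recommended = max_queue_depth(2048, hosts=32, luns_per_host=4)
default_load = worst_case_saturation(32, hosts=32, luns_per_host=4,
                                     port_queue_limit=2048)
print("recommended per-LUN Queue Depth:", recommended)
print(f"worst case with a default of 32: {default_load:.0%} of port queue")
```

With these example numbers, a driver default of 32 could oversubscribe the port queue twofold in the worst case, whereas the computed value falls inside the range the article recommends for shared ports. Real bursts rarely align perfectly, so measured Pending Exchanges remain the better guide.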

How to Spot an Incorrectly-Set HBA Queue Depth

By knowing the number of exchanges that are pending at any one time, it is possible to manage the storage Queue Depths. Pending Exchanges show how full the queues are actually getting. Performance is often best and most predictable when queuing levels are low.

Without VirtualWisdom, the only way to confirm HBA Queue Depth settings is to check the HBA parameter setting on each server, which is a time-consuming task and only provides a point-in-time check.

The VirtualWisdom SAN Performance Probe Maximum Pending Exchanges metric records the maximum number of Initiator-Target-LUN exchanges in progress during any time interval. This value cannot exceed the HBA Queue Depth, so it will typically indicate the current HBA Queue Depth setting.

The first step is to baseline the environment to determine which servers have optimal settings and which ones are set too high or too low. A good report to run lists the Initiator, Initiator Name and Maximum Pending Exchanges, sorted descending by Maximum Pending Exchanges.

Unless storage ports have been dedicated to a server, the optimal HBA Queue Depth settings are in the range of 2 to 8. A threshold can be set so that any HBA with a Maximum Pending Exchanges value greater than 8 (for example) is flagged in red for further investigation.
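The baseline report and threshold can be sketched in a few lines. This is not the VirtualWisdom report engine: the record type, field names, and sample values below are hypothetical stand-ins for exported Maximum Pending Exchanges data, and the threshold of 8 is the example value from the text.

```python
from dataclasses import dataclass


@dataclass
class InitiatorSample:
    initiator: str               # e.g. the initiator's address on the fabric
    name: str                    # friendly server or HBA name
    max_pending_exchanges: int   # observed maximum over the interval


def baseline_report(samples, threshold=8):
    """Sort descending by Maximum Pending Exchanges and flag any
    initiator whose value exceeds the threshold."""
    ordered = sorted(samples,
                     key=lambda s: s.max_pending_exchanges,
                     reverse=True)
    return [(s.initiator, s.name, s.max_pending_exchanges,
             s.max_pending_exchanges > threshold)
            for s in ordered]


# Hypothetical measurements for three initiators.
samples = [
    InitiatorSample("0x010200", "web01", 4),
    InitiatorSample("0x010300", "backup01", 64),
    InitiatorSample("0x010400", "db01", 8),
]

report = baseline_report(samples)
for initiator, name, max_pending, flagged in report:
    marker = "INVESTIGATE" if flagged else ""
    print(f"{initiator}  {name:<10} {max_pending:>4}  {marker}")
```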

HBAs connected to dedicated storage ports can utilize much higher Queue Depths. The storage vendor should be consulted for recommended maximums in this situation.

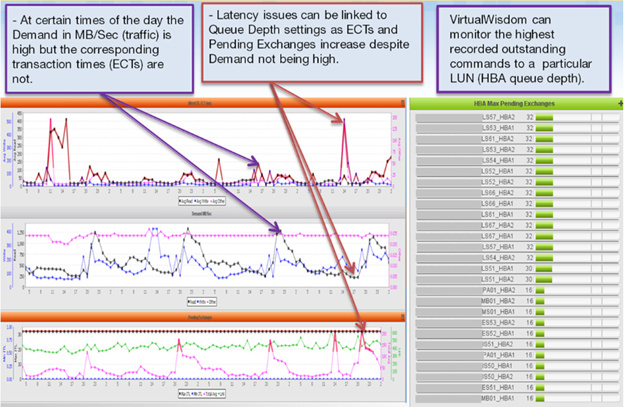

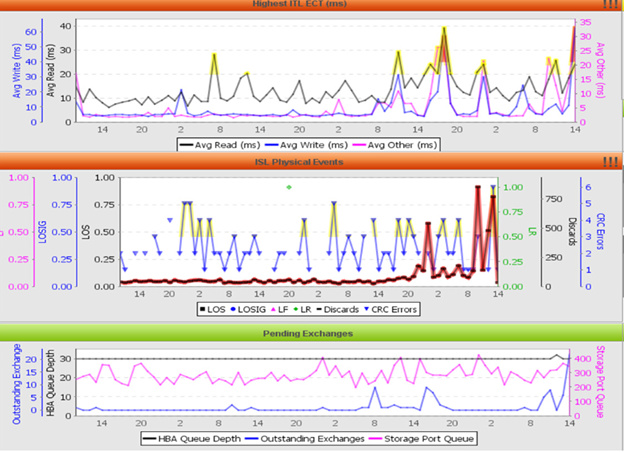

Correlating HBA Queue Depth with Other Events

Setting Queue Depths too high (the most common problem) can cause storage ports to be overrun or over-saturated:

Numeric representation of the key metrics in the example on the previous page:

The first indication of a Queue Depth problem is usually intermittent slowdowns in read response times. Typically, when a host has an extremely high Queue Depth setting, its own response times will suffer, as will those of every other host on that storage port. Since SCSI traffic is usually very bursty in nature, these initial symptoms will be sporadic and infrequent. As the storage port queue fills up more and more, the response time impact will grow. Eventually, the storage port response times will become extremely high (500-1000 ms), and the queue will become saturated. When this occurs, the storage port will answer any additional exchanges with a ‘queue full’ status message. There is no standard for how HBAs should handle this response, and most simply re-transmit the exchange, compounding the issue.

How to Resolve Incorrectly-Set HBA Queue Depths

The first step toward resolving Queue Depth problems is to baseline the environment, in order to determine which Initiators have optimal Queue Depth settings and which are set too high or too low. To do this, run the report described earlier (Initiator, Initiator Name and Maximum Pending Exchanges, sorted descending by Maximum Pending Exchanges with values greater than 8 highlighted in red).

This report can be used to compare the settings on the servers to the relative values of the application(s) which they support. Improperly set Queue Depths can lead to unpredictable performance and even brownouts. Servers that share storage ports should have a lower setting to avoid random slowdowns due to excessive load from a single device. The most critical systems should be set to higher Queue Depths than those which are less critical. Dedicated storage ports can handle higher Queue Depth settings in order to achieve performance benefits.

For the HBA Queue Depth setting on servers, one should consider these points:

- Are the top Initiators hosting your highest-priority applications?

- Conversely, are the Initiators with a low Queue Depth setting hosting the lowest-priority applications? If so, the small Queue Depth might make sense. If not, perhaps they are being inappropriately constrained (throttled) by the Queue Depth configuration.

- Load-balancing HBAs within the same server typically have the same Queue Depth setting. The Storage Virtualizer automatically manages the Storage-Virtualizer-to-Storage pending exchanges.
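The first two questions in the list above amount to checking whether Queue Depth ordering matches application priority. The sketch below finds "inversions", where a more critical host has a lower Queue Depth than a less critical one. The host names, the priority scale (1 is most critical), and the values are all hypothetical; the priority ranking has to come from the business, not from SAN measurements.

```python
def priority_inversions(hosts):
    """Return (critical_host, less_critical_host) pairs where the more
    critical host has the lower Queue Depth.

    hosts: list of (name, priority, queue_depth); priority 1 is the
           most critical, larger numbers are less critical.
    """
    inversions = []
    for name_a, prio_a, depth_a in hosts:
        for name_b, prio_b, depth_b in hosts:
            if prio_a < prio_b and depth_a < depth_b:
                inversions.append((name_a, name_b))
    return inversions


# Hypothetical hosts sharing a storage port.
hosts = [
    ("erp-db", 1, 4),    # most critical, yet the lowest Queue Depth
    ("backup", 3, 32),   # least critical, yet the highest Queue Depth
    ("web", 2, 8),
]

inversions = priority_inversions(hosts)
for critical, other in inversions:
    print(f"{critical} is throttled more than lower-priority {other}")
```

An empty result would suggest the Queue Depth settings are at least consistent with the stated priorities; it says nothing about whether the absolute values are appropriate for the storage port.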

Where dedicated storage ports are not in use, Queue Depths should be set between 2 and 8, with a setting of 8 reserved for the more latency-sensitive applications. Keeping shared-port settings in this range lets each HBA request only the amount of data that it can consume and gives more hosts timely access to the data they request. HBAs on dedicated storage ports can typically be set as high as the storage vendor’s recommended maximum allows.

While these recommendations are for typical environments that we have observed, it is important to also monitor the latencies and determine what is best for a particular environment and application. Furthermore, a review of maximum Queue Depths should be made to ensure congestion occurs as infrequently as possible. Queue Depth settings should stay within a maximum vendor-specified range, however.

Ongoing Monitoring

Once the proper Queue Depth settings have been determined and established for an environment, it is a good idea to create an alarm for any HBAs that are added to the SAN which don’t fall within the recommendations. It is also a good idea to create a filter in advance to exclude any servers which may not have to follow the policy.

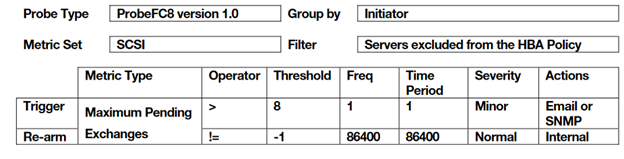

Note that the following configuration uses a time-based re-arm. This works unless you are using a Rover on the link. The re-arm is set by entering a condition which is always true, along with a frequency and time period that specify the desired re-arm time. In this case, the re-arm time is 24 hours (60 sec/min * 60 min/hour * 24 hours = 86,400 seconds), assuming a 1-second interval: